BlockedIn

Overview

Website Finalized

June 5, 2010

Website finalized. Demo uploaded, results posted, conclusion added, project details added, proposal changed and updated.

Added Site Content

May 12, 2010

- Website requirements followed, multiple pages added to the site.

- Proposal info updated based on content from Midterm Presentation.

- Pictures added to summary and project details (block diagram).

- More specific descriptions added of what will be included later in the project conclusion.

Proposal

April 18, 2010

Proposal added to website.

Website

April 17, 2010

Project website is started.

Proposal

Summary

Our program recreates the game of Blokus, in which one or more human players can play against one or more AI agents. Our goal is to implement an AI agent that competes at a realistic level of game play.

Introduction

The agent will play the game of BlockedIn against one or more human players and/or other intelligent agents. Multiple agents can be used, and their level of intelligence will vary. The player selects the number of AI opponents when the game loads. The agents use algorithms to determine the best place to position their game pieces.

We built these AI agents as three reflex agents and one utility agent. They play at a solid level against each other as well as against a human.

Game Rules

Each of the four players is given an identical set of 21 unique tiles. Each tile is made of between 1 and 5 squares arranged in a unique pattern. The board is a square grid of 400 squares (20 by 20). Play proceeds clockwise, with each player placing one of their tiles on the board each turn. On their first turn, each player places a tile of their choice in their corner of the board. After that, a tile can only be placed so that it touches a corner of one of that player's existing tiles diagonally. The game ends when none of the four players can place any more tiles on the board. The goal of the game is to place as many tiles on the board as possible. Points are determined by the number of squares on the tiles placed on the board. An additional 15 points are awarded if all 21 tiles are placed on the board, and an additional 5 (20 in total) are awarded if the last tile placed is the single-square tile. The player with the most points wins.
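The scoring rule can be written as a short function. This is a minimal sketch, not the project's code. It assumes that a player's placed tiles are given as their square counts in the order they were played. The usage line relies on the fact that the 21 tiles are the free polyominoes of size 1 to 5, which total 89 squares.

```python
# Minimal sketch of the BlockedIn scoring rule (not the project's code).
# Assumption: a player's placed tiles are given as their square counts,
# in the order they were played (the last entry is the final tile).

TOTAL_TILES = 21
ALL_TILES_BONUS = 15
MONOMINO_LAST_BONUS = 5


def score(placed_sizes: list[int]) -> int:
    """One point per square placed, plus the end-of-game bonuses."""
    points = sum(placed_sizes)
    if len(placed_sizes) == TOTAL_TILES:
        points += ALL_TILES_BONUS
        # 5 more (20 in total) when the last tile played is the single square.
        if placed_sizes[-1] == 1:
            points += MONOMINO_LAST_BONUS
    return points


# The 21 tiles: 1 monomino, 1 domino, 2 trominoes, 5 tetrominoes, 12 pentominoes.
full_set_monomino_last = [2] + [3] * 2 + [4] * 5 + [5] * 12 + [1]
print(score(full_set_monomino_last))  # 109
```

Under this rule, 109 points (89 squares plus 20 bonus points) is the highest possible score.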

PAGE Description

Percepts

- Pieces remaining, size of pieces remaining, open spaces on the board, available positions for piece placement, and location of opponent pieces on the board.

Actions

- chooseBlock()

- chooseSpace(block)

- rotateBlock(block)

- playBlock(space, block, orientation)
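Before playBlock places a piece, it must check that the placement follows the rules. The following is a hypothetical sketch of such a check, not the project's implementation. It makes several assumptions: the board is a 20x20 list of lists (0 = empty, 1-4 = player ids), a move is a list of absolute (row, col) cells, and the `CORNERS` table assigns each player a starting corner. It also applies the standard Blokus rule that a new tile may not share an edge with the same player's tiles.

```python
# Hypothetical legality check for a move like playBlock(space, block, orientation).
# Assumptions: 20x20 board of ints (0 = empty, 1-4 = player ids), a move is a
# list of absolute (row, col) cells, and CORNERS is a made-up corner assignment.
# The "no shared edge" test is the standard Blokus rule.

SIZE = 20
CORNERS = {1: (0, 0), 2: (0, SIZE - 1), 3: (SIZE - 1, SIZE - 1), 4: (SIZE - 1, 0)}
EDGES = ((1, 0), (-1, 0), (0, 1), (0, -1))
DIAGONALS = ((1, 1), (1, -1), (-1, 1), (-1, -1))


def is_legal(board, player, cells, first_move):
    for r, c in cells:
        if not (0 <= r < SIZE and 0 <= c < SIZE) or board[r][c] != 0:
            return False  # off the board or on top of another tile
    if first_move:
        return CORNERS[player] in cells  # first tile must cover the player's corner

    def own(r, c):
        return 0 <= r < SIZE and 0 <= c < SIZE and board[r][c] == player

    touches_corner = False
    for r, c in cells:
        if any(own(r + dr, c + dc) for dr, dc in EDGES):
            return False  # shares an edge with one of the player's own tiles
        if any(own(r + dr, c + dc) for dr, dc in DIAGONALS):
            touches_corner = True
    return touches_corner
```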

Goal

- To play as many of the 21 pieces as possible within the constraints of the game, minimizing the number of squares left unplayed. The AI aims to find the piece that contains the most squares, maximizes the number of playable spaces on the board, and blocks an opponent’s possible moves.

Environment

- A 2D graphical environment consisting of a grid of squares representing the BlockedIn board, the tiles currently on the board (initially none), four players (distinguished by color), and each player's remaining tiles (initially 21).

Algorithms and Functions

chooseBlock()

Uses other functions to determine the best block and the best space location for that block

pieceWeight - # of blocks in piece

chooseSpace(block)

Finds the best placement for the chosen block

blockingWeight - # opposition corners blocked

creationWeight - # friendly corners created
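The three weights can be computed directly from the board. This is a hypothetical sketch that makes several assumptions. The board is a 20x20 grid of ints (0 = empty, 1-4 = player ids). A "corner" is an empty square that touches a player's tile diagonally but not along an edge. The three weights are simply added together, which may not match how the agents actually combine them.

```python
# Hypothetical sketch of pieceWeight, blockingWeight and creationWeight.
# Assumptions: 20x20 board of ints (0 = empty, 1-4 = player ids); a "corner" is
# an empty square touching a player's tile diagonally but not along an edge;
# the weights are summed with equal importance (an illustrative choice).

SIZE = 20
EDGES = ((1, 0), (-1, 0), (0, 1), (0, -1))
DIAGONALS = ((1, 1), (1, -1), (-1, 1), (-1, -1))


def corners(board, player):
    """Empty squares where `player` could anchor a new tile."""
    def own(r, c):
        return 0 <= r < SIZE and 0 <= c < SIZE and board[r][c] == player

    return {
        (r, c)
        for r in range(SIZE)
        for c in range(SIZE)
        if board[r][c] == 0
        and not any(own(r + dr, c + dc) for dr, dc in EDGES)
        and any(own(r + dr, c + dc) for dr, dc in DIAGONALS)
    }


def total_weight(board, player, cells):
    after = [row[:] for row in board]  # board after the candidate move
    for r, c in cells:
        after[r][c] = player
    piece_weight = len(cells)  # squares in the tile
    blocking_weight = sum(  # opponent corners covered by this move
        len(corners(board, p) - corners(after, p)) for p in (1, 2, 3, 4) if p != player
    )
    creation_weight = len(corners(after, player) - corners(board, player))  # new friendly corners
    return piece_weight + blocking_weight + creation_weight
```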

rotateBlock(block)

Determines orientation of the piece

OrientationValue - value (0-7) of piece orientation
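The eight orientation values correspond to four quarter-turn rotations, each with or without a mirror flip. This sketch assumes one possible mapping, value = 4 * flip + quarter_turns, with pieces stored as (row, col) offsets. The project's actual numbering may differ.

```python
# Sketch of mapping an orientation value 0-7 to a transformed piece.
# Assumption: value = 4 * flip + quarter_turns; a flip mirrors the piece
# left-to-right before it is rotated clockwise by quarter_turns * 90 degrees.


def normalize(cells):
    """Shift cells so the smallest row and column are both 0."""
    min_r = min(r for r, _ in cells)
    min_c = min(c for _, c in cells)
    return frozenset((r - min_r, c - min_c) for r, c in cells)


def orient(cells, value):
    flip, quarter_turns = divmod(value, 4)
    if flip:
        cells = [(r, -c) for r, c in cells]  # mirror left-to-right
    for _ in range(quarter_turns):
        cells = [(c, -r) for r, c in cells]  # 90 degrees clockwise (rows grow downward)
    return normalize(cells)


def distinct_orientations(cells):
    """Symmetric pieces repeat shapes, so keep only the unique ones."""
    return {orient(cells, v) for v in range(8)}
```

Removing duplicate orientations avoids repeated work. For example, the 2x2 square has only one distinct orientation, so there is no need to try it eight times.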

playBlock(space, block, orientation): places the piece on the board (action)

Milestones

- 4/09 - Preliminary research

- 4/19 - Developing the graphic environment

- 4/30 - Basic game play (without AI)

- 5/03 - Midterm Project Presentation

- 5/21 - Implementation of AI

- 5/28 - Completion of project

- 6/02 - Final Project Presentation

Team

Christina Forney

cforney@calpoly.edu; website creation, design, and publishing; Christina’s AI

Mike Sudolsky

mike.sudolsky@gmail.com; all graphics, GLUI, etc. (he is amazing!); Mike’s AI

Tommy Brown

tfbrown13@yahoo.com; SVN creation; Tommy’s AI

Robin Usher

rjusher@calpoly.edu; Robin’s AI

Project Details

Program Details

Our program uses four versions of AI:

Mike’s AI

is a simple strategy that places a piece where there is the most room, either vertically or horizontally. To find a space, it finds the biggest horizontal and vertical open spaces, then chooses the larger of the two. To find a block, it randomly selects 5 blocks and chooses the largest one that fits in the chosen space. This is a reflex agent.
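A rough sketch of this reflex strategy follows. It treats "room" as the longest straight run of empty squares in any row or column. It also assumes, for simplicity, that a block "fits" when its square count is no larger than that run. Both are illustrative assumptions rather than the agent's real tests.

```python
import random

# Hypothetical sketch of Mike's reflex strategy on a board of ints (0 = empty).
# Assumptions: "room" = longest straight run of empty squares in a row or
# column; a block "fits" if its square count is at most the run length.


def longest_run(lines):
    """Return (length, line_index, start) of the longest run of zeros."""
    best = (0, -1, -1)
    for i, line in enumerate(lines):
        run = 0
        for j, value in enumerate(line):
            run = run + 1 if value == 0 else 0
            if run > best[0]:
                best = (run, i, j - run + 1)
    return best


def biggest_space(board):
    horizontal = longest_run(board)
    vertical = longest_run([list(col) for col in zip(*board)])
    if vertical[0] > horizontal[0]:  # ties go to the horizontal space
        return "vertical", vertical
    return "horizontal", horizontal


def choose_block(remaining_sizes, space_length, rng=random):
    """Sample up to 5 remaining blocks; return the largest that fits, or None."""
    sample = rng.sample(remaining_sizes, min(5, len(remaining_sizes)))
    fitting = [size for size in sample if size <= space_length]
    return max(fitting) if fitting else None
```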

Christina’s AI

uses a point scoring system to find the “best” spot to play and the “best” piece to play there each round. It saves all diagonal coordinates, then tries every unplaced piece at each diagonal spot, in every rotation and flip combination. Points are assigned based on the “goodness” of each spot, piece, rotation, and flip combination, and the highest-scoring combination is played. This is a utility agent.
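The exhaustive search can be sketched as a loop over every spot, piece, and orientation that keeps the highest-utility legal move. This is a generic sketch with several assumptions. The `is_legal` and `utility` functions are supplied by the caller and stand in for the agent's own rule check and "goodness" points. `pieces` maps each piece name to its list of orientations. Each orientation is tried with each of its cells placed on the diagonal spot.

```python
from itertools import product

# Generic sketch of an exhaustive utility search (not the project's code).
# Assumptions: `spots` are diagonal (row, col) squares; `pieces` maps a piece
# name to its orientations, each a list of (row, col) offsets; `is_legal` and
# `utility` are caller-supplied stand-ins for the agent's own functions.


def best_move(spots, pieces, is_legal, utility):
    best, best_score = None, float("-inf")
    for (sr, sc), (name, orientations) in product(spots, pieces.items()):
        for shape in orientations:
            for ar, ac in shape:  # place each cell of the shape on the spot in turn
                cells = [(sr + r - ar, sc + c - ac) for r, c in shape]
                if not is_legal(cells):
                    continue
                score = utility(name, cells)
                if score > best_score:  # keep the first best on ties
                    best, best_score = (name, cells), score
    return best, best_score
```

Because every combination is scored, the agent always finds the best move under its utility function. The cost is that it does far more work per turn than the reflex agents.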

Tommy’s AI

tries to block its opponents while using the largest possible piece. It finds all of its own open diagonals, then all of its opponents’ diagonals, chooses an opponent diagonal within 3 spaces of its own, and stores this location. It then finds the largest piece from a random set of 10 pieces and tries to flip it to block the opponent. If that is not possible, it finds another way to place the largest piece.
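The target-selection step might look like the following. The description does not say how "within 3 spaces" is measured. This sketch assumes Chebyshev distance (the larger of the row and column differences) and picks the closest qualifying pair.

```python
# Sketch of the target selection step in Tommy's agent.
# Assumptions: diagonals are (row, col) squares; "within 3 spaces" is measured
# as Chebyshev distance; the closest pair wins and ties go to the first found.


def chebyshev(a, b):
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def choose_target(own_diagonals, opponent_diagonals, max_distance=3):
    """Return the closest (own, opponent) pair of diagonals, or None."""
    best = None
    for own in own_diagonals:
        for opp in opponent_diagonals:
            d = chebyshev(own, opp)
            if d <= max_distance and (best is None or d < best[0]):
                best = (d, own, opp)
    return None if best is None else (best[1], best[2])
```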

Robin’s AI

moves toward the center of the board. To choose a spot, it looks for a spot with enough space to fit its largest remaining piece. If there is more than one, it selects the spot closest to the center of the board; if there are none, it selects the largest space available. To choose a piece, it uses a preset weighting of pieces based mostly on their length and how difficult they are to place. Each turn it selects the top five remaining pieces by this weighting, randomly picks one of the five, and tries to play it. This is a reflex agent.
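The two choices can be sketched as follows. This sketch rests on several assumptions. The board is 20x20, so its center is (9.5, 9.5). The candidate spots have already been filtered to those that fit the largest remaining piece. `weights` is a hypothetical preset table in which a higher value means the piece should be played sooner.

```python
import math
import random

# Sketch of Robin's spot and piece choices (not the project's code).
# Assumptions: 20x20 board with center (9.5, 9.5); `spots` already fit the
# largest remaining piece; `weights` is a hypothetical preset priority table.

CENTER = (9.5, 9.5)


def closest_to_center(spots):
    return min(spots, key=lambda s: math.dist(s, CENTER))


def pick_piece(remaining, weights, rng=random):
    """Randomly pick one of the five highest-weighted remaining pieces."""
    top_five = sorted(remaining, key=lambda name: weights[name], reverse=True)[:5]
    return rng.choice(top_five)
```

The random choice among the top five keeps the agent from being fully predictable while still favoring the hardest-to-place pieces.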

Block Diagram


Results

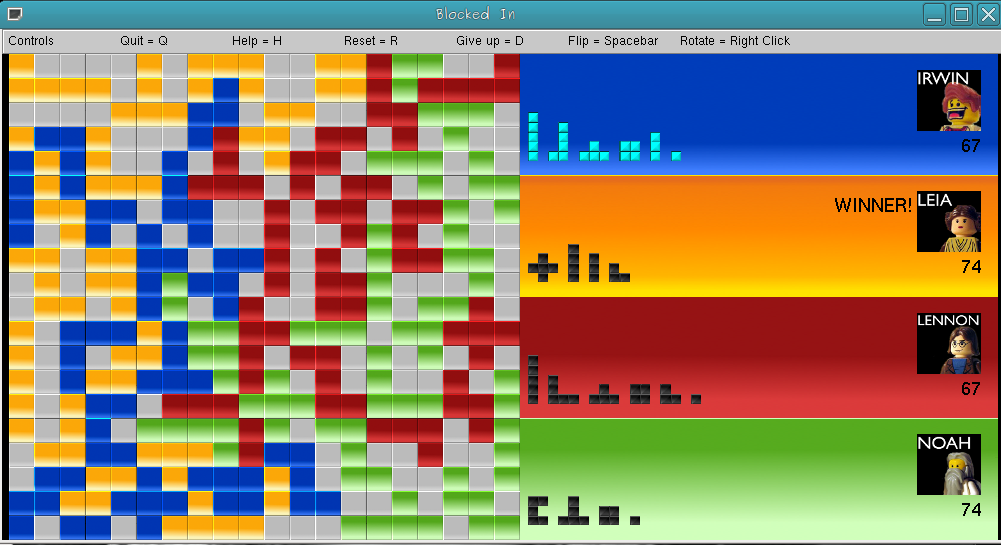

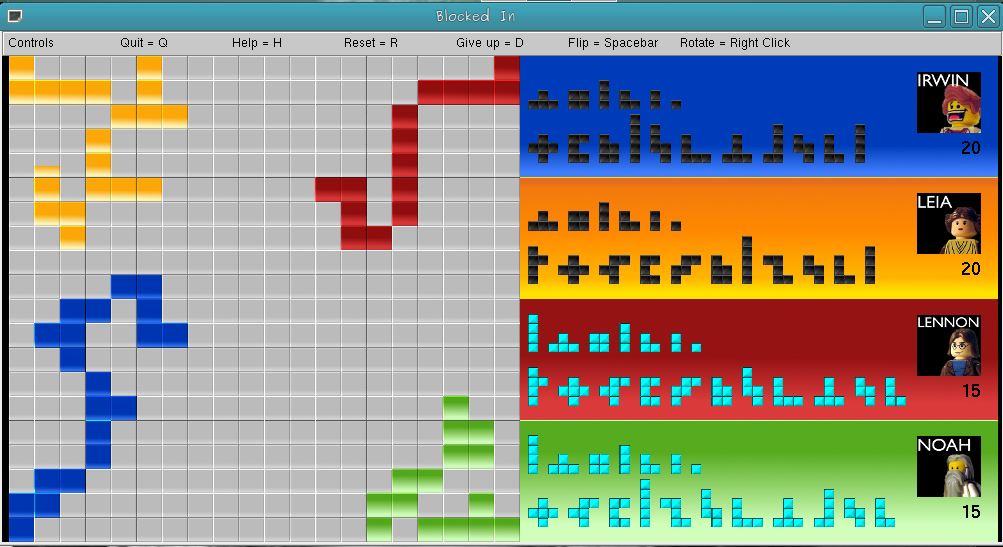

Summary

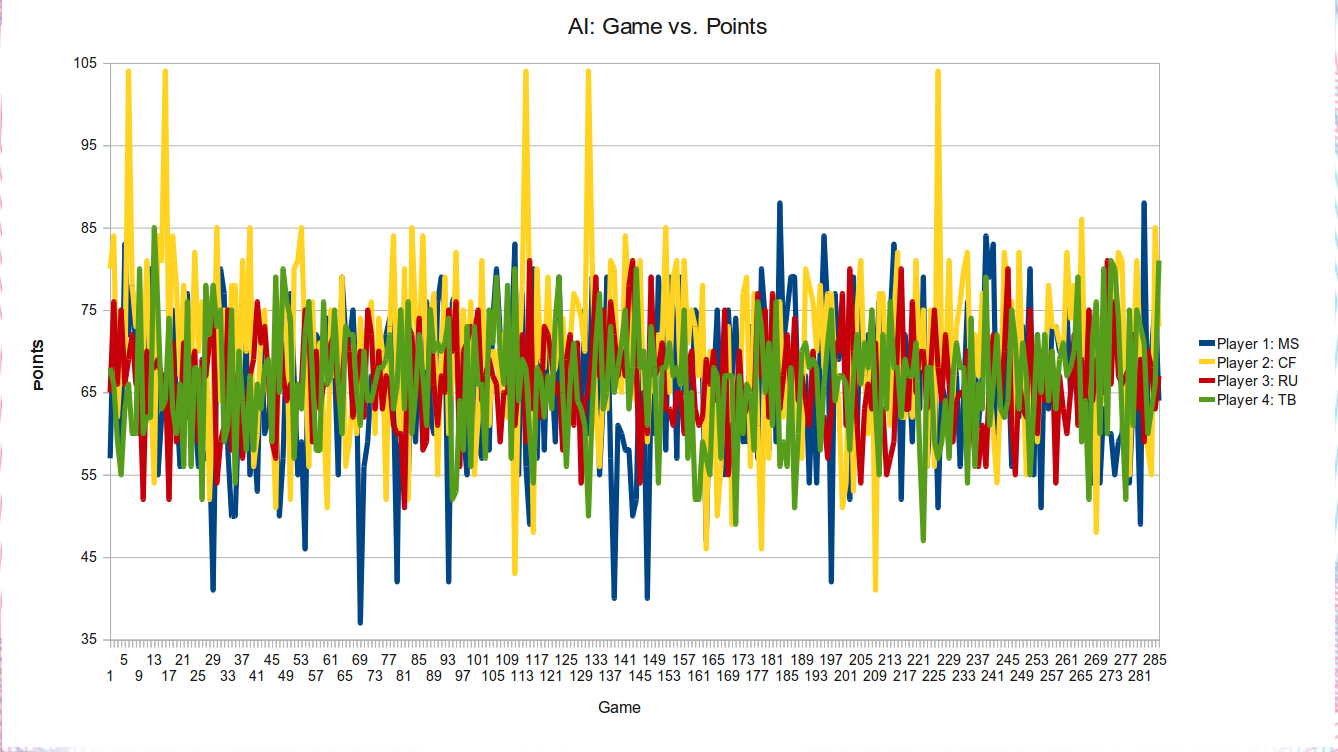

Even though the results varied from turn to turn, there was an overall trend in AI ability. In general, Christina’s agent had the most success, while the other three were very close in average score. Christina’s agent was also the only agent ever to place all of its pieces on the board. Turn by turn, the results were unpredictable, because each player’s actions depended on the plays of the other players.

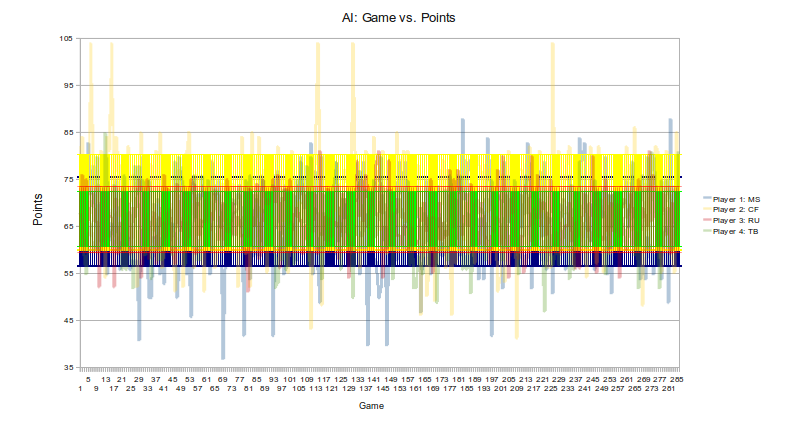

Game vs. Points

This is a graph of the points each agent scored in each of 286 games. It shows the overall trend in results across many games.

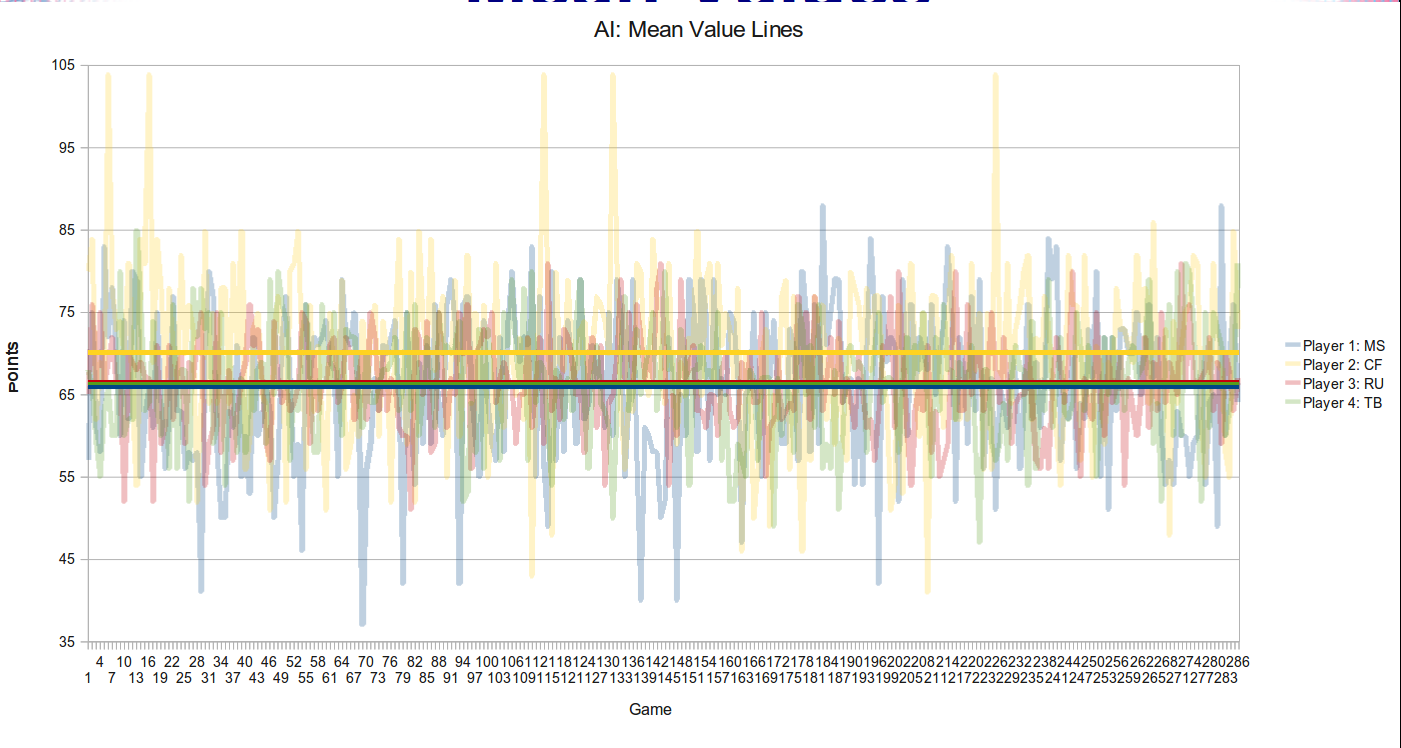

Mean Values

This graph has an overlay of the mean values for each player. It shows how well the agents did overall on average.

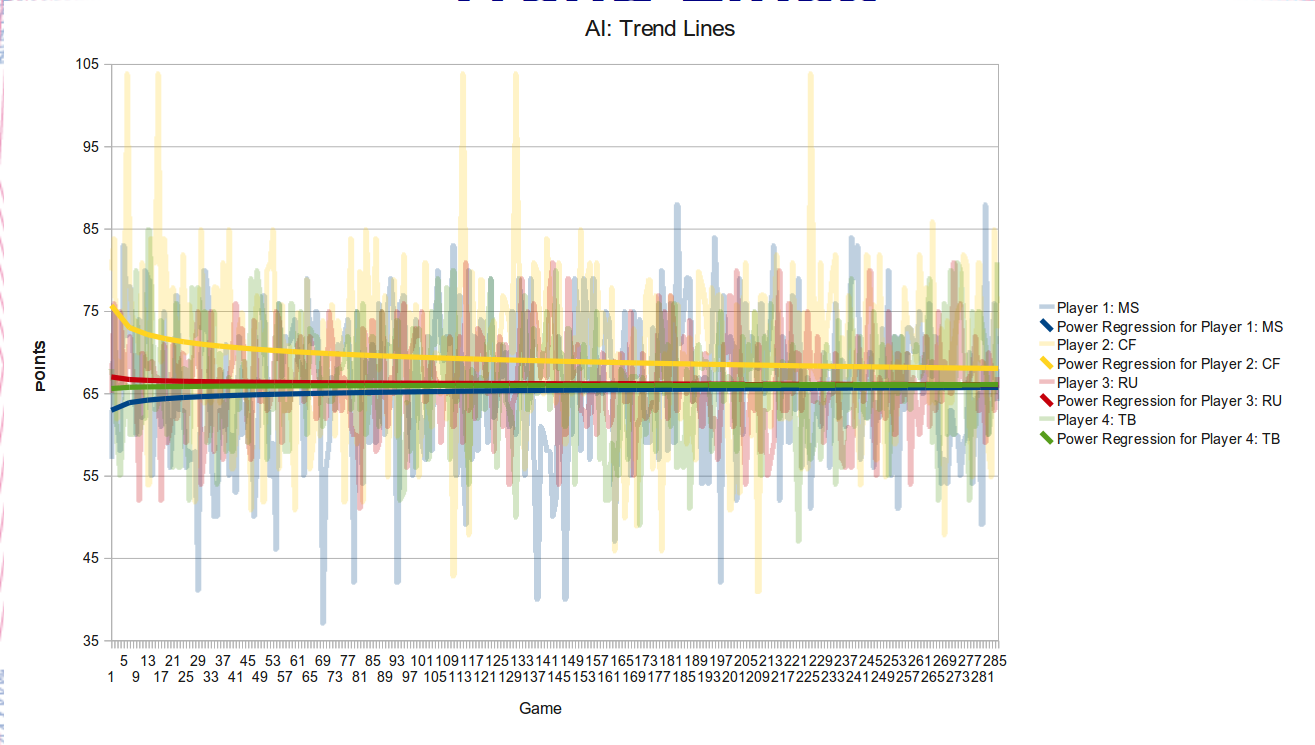

Trend Lines

This graph shows an overlay of the trend lines. As more games are played, the agents’ scores tend to converge toward a single level.

Standard Deviation

This graph shows an overlay of the standard deviations of the games played.
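Per-agent means and standard deviations like those graphed here can be computed with Python's `statistics` module. This is a sketch that assumes `scores` maps each agent to its list of per-game point totals, with at least two games per agent. It uses the sample standard deviation, although the project may have used the population version. The numbers in the test are made up and are not the project's data.

```python
import statistics

# Sketch of per-agent summary statistics (mean, sample standard deviation).
# Assumption: `scores` maps each agent to its per-game point totals, with at
# least two games per agent (statistics.stdev needs two or more values).


def summarize(scores):
    return {
        agent: (statistics.mean(points), statistics.stdev(points))
        for agent, points in scores.items()
    }
```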

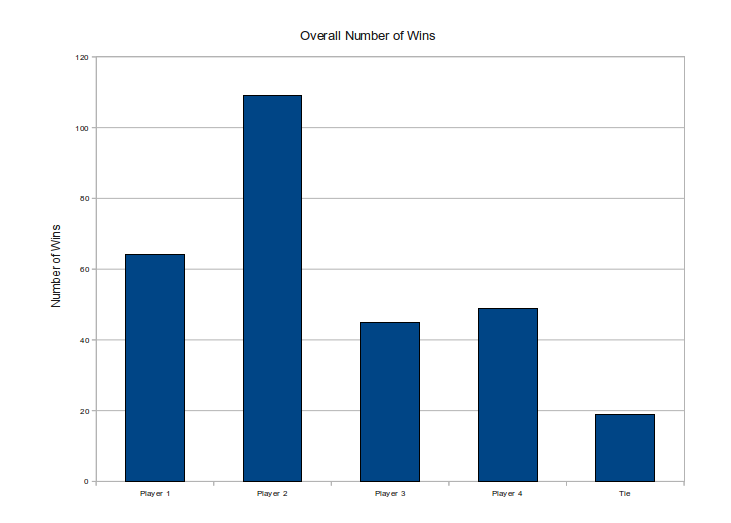

Overall Number of Wins

This is a bar chart of the overall number of wins by each AI agent.

AI agent 2 (yellow) won 109 of 286 games (38%), agent 1 (blue) won 64 games (22%), agent 4 (green) won 49 games (17%), agent 3 (red) won 45 games (16%), and 19 games (7%) were ties.
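As a quick arithmetic check, the reported counts add up to the 286 games played, and rounding each share gives the percentages above:

```python
# Recomputes the win percentages from the reported counts.
wins = {
    "agent 2 (yellow)": 109,
    "agent 1 (blue)": 64,
    "agent 4 (green)": 49,
    "agent 3 (red)": 45,
    "ties": 19,
}
total = sum(wins.values())  # 286 games
percentages = {name: round(100 * count / total) for name, count in wins.items()}
print(total, percentages)
```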


Demo

- Download the game as a zip file.

- Unpack the folder.

- Open a terminal.

- cd to the BlockedIn folder.

- To compile: make

- To run: ./BlockedIn

Conclusion

Overall, our AI provided a competitive opponent for various levels of game play. The user can choose among four AI agents to play against, and the opponents can all be the same agent or different ones. This game could be distributed as a simple computer game for playing at leisure.

The AI we implemented worked well for our game. However, it is useful only for this application, because it depends critically on the strategy specific to this game. The AI could be adapted to a modified version of the game, but it does not generalize beyond this game.

Future steps include modifying the game to incorporate other actions and/or strategies. For example, allowing a player to remove or move an opponent’s piece would dramatically change the game and how the strategy is programmed. Another improvement to the current game strategy would be to implement a learning agent. It would be especially interesting to see how game play changes as the agent learns more about how to play. Unfortunately, with a small group and a short time frame, this agent type was well beyond our scope and ability.